The Technology In Amazon Alexa – The Tech Behind Series

Jerry Wallis

23 min read

Amazon Alexa is a recent digital innovation that has made our lives much easier. Anyone who has an Alexa-enabled device knows the convenience of hands-free voice commands. But most people don’t know the technology that makes this possible. Voice control or voice assistance is not a new technology in digital products. Still, Alexa has taken things to a new level allowing the possibility of controlling almost everything in your home with your voice.

When Amazon released the Echo Smart Speaker in November 2014, they had no idea of the revolutionary impact their device would have on society. The Echo was an Alexa-enabled speaker that could control other devices in the home and play music. The technology that made the Echo Speaker special was the in-built voice recognition software that allowed users to speak to their devices and provide instructions without ever having to touch or press a button.

Amazon has since released a series of Echo models, each with unique features. But at its core, thanks to Alexa, the Echo is still a voice-activated assistant that can answer your questions, play music, control your smart home devices, and more.

While that’s all well and good, have you ever wondered exactly how this voice assistant technology works? How does Alexa understand you and your commands to assist you?

We’ll dive into exactly that and much more about what makes Alexa do its thing. We will also discuss some features that make Amazon Alexa so popular. By the end of this post, you will better understand how this technology works and why it is so popular. This is the tech behind Amazon Alexa!

What Is Amazon Alexa & What Does It Do? 🔊

Amazon Alexa is Amazon’s cloud-based conversational AI and voice-controlled assistant, allowing users to interact with the Amazon Echo smart speaker. Don’t mistake it for the Amazon Echo, a Wi-Fi-connected speaker that houses Amazon’s artificial intelligence voice assistant, Alexa.

Alexa’s voice assistance technology is used across several of Amazon’s products, including the aforementioned Echo and Echo Dot, as well as the Tap, Fire TV and hundreds of third-party products.

Alexa was first released in 2014 and has become one of the most popular voice-activated assistants on the market. Amazon Alexa can perform various tasks but is mainly used for playing music and answering questions. Most Alexa-enabled devices, such as the Echo, can make hands-free calls, set alarms and timers, check the weather, and much more. You can even use Alexa to control your smart home devices, such as lights and thermostats.

In 2016, Amazon released the Echo Dot, a smaller and more affordable version of the original Amazon Echo. The Echo Dot has been very popular, selling out in many stores, largely thanks to its Alexa-enabled features. This is why Amazon plans to release even more products that feature Alexa in the future.

One of its most significant strengths is that Alexa is constantly being updated with new skills and features. Plus, Alexa is always getting more intelligent thanks to its machine learning capabilities, which we will look at in more detail later. For instance, the more you use Amazon Alexa, the more familiar it becomes with your preferences, allowing it to provide personalised recommendations.

Lastly, Alexa is available in various languages, including English, Spanish, French, Italian, and German.

How Does Alexa Work? 🤔

“Alexa, turn on the lights.” The kitchen lights, which are WiFi enabled, turn on after that. Alexa fetches information from you and directs it to the hardware device, which is reflected in the tangible output. Sounds simple, right? Everything happening in the background is far from simple, to say the least.

For instance, Amazon Echo records your voice commands via its built-in microphone. The device is always connected to the internet and sends the audio recording to Alexa Voice Service (AVS). AVS understands the recording and converts it into the appropriate commands. The device’s computing system then searches for the “kitchen light” in the list of smart home appliances registered along with the relevant “skill” – more on this is coming up. After that, it then sends the suitable output back to the device, in this case by switching on the smart light labelled as “kitchen light”.
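To make that flow a little more concrete, here is a minimal Python sketch of the last two steps: looking up a named device in a registry and switching it on. The `SmartDevice` class, the `handle_command` function and the skill name are all hypothetical, made up purely for illustration; this is not how Amazon's service is actually implemented.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SmartDevice:
    name: str            # label the user gave the device, e.g. "kitchen light"
    skill: str           # hypothetical skill that knows how to talk to this device
    is_on: bool = False


def handle_command(text: str, devices: List[SmartDevice]) -> str:
    """Match an already-transcribed command against the registered devices."""
    lowered = text.lower()
    for device in devices:
        if device.name in lowered:
            if "turn on" in lowered:
                device.is_on = True
                return f"{device.name} turned on via {device.skill}"
            if "turn off" in lowered:
                device.is_on = False
                return f"{device.name} turned off via {device.skill}"
    return "Sorry, I couldn't find that device."


# A one-device registry; the skill name is an invented placeholder.
registry = [SmartDevice("kitchen light", "hypothetical-lighting-skill")]
result = handle_command("turn on the kitchen light", registry)
print(result)
```

In the real system, the transcription happens in the cloud first; the sketch starts from the point where the command is already text.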

Now that we have a good starting point on Alexa’s inner workings, let’s take an in-depth look at all the technical pieces driving it from behind the scenes. It’s time to uncover the hidden mechanics behind its magic!

The Tech Behind Amazon Alexa 🖥️

As we’ve already established, Amazon Alexa is a voice-controlled assistant capable of performing various tasks of varying difficulty levels, from playing music to ordering a pizza. But how does Alexa do all that?

🗣 Wake Word Detection

It all starts with the trigger. At the heart of every Alexa-enabled device, such as the Amazon Echo, is a small computer constantly listening for the “wake word” – in most cases, “Alexa.”

Once the wake word is detected, the audio recording of your voice command is sent to Amazon’s servers, where it is processed further. The reason is that the device’s CPU is nowhere near powerful enough to handle the vast range of possible commands and responses – this is something only the cloud’s seemingly limitless scalability can handle. The server then analyses the audio using speech recognition software and converts it into text before instructing Alexa to respond accordingly.

📡 Signal Processing

In addition to the server-side processing power, the Alexa-enabled device also contains several sensors that help it hear and understand your voice commands. Together, these components allow Alexa to accurately interpret your voice commands and fulfil your requests.

Amazon Alexa’s sensors include four microphones, an acoustic resonator, and a processor that handles Natural Language Understanding (NLU) – we’ll delve deeper into this very soon. These collectively aim to enhance the input signal, i.e. your audio instructions, by minimising background noise and cleaning the audio, achieved through signal modification and synthesis. This overall process is called “signal processing”.

The main idea behind signal processing is to identify ambient noise like pets or TV and minimise them to enhance the clarity of the actual command. The microphones and sensors identify the source of the signal so that the device can focus entirely on it. Acoustic echo cancellation techniques reduce unwanted signals and concentrate solely on the remaining important ones.
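One classic way a microphone array can "focus" on a speaker is delay-and-sum beamforming: shift each microphone's signal so the speech lines up, then average, so the speech reinforces itself while uncorrelated noise partly cancels. The sketch below is a toy illustration of that idea with two microphones and made-up sample values. It is an assumption that this resembles what happens inside an Echo, which almost certainly uses far more sophisticated processing.

```python
def delay_and_sum(channels, delays):
    """Align each channel by its known delay (in samples) and average them."""
    usable = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[d + i] for ch, d in zip(channels, delays)) / len(channels)
        for i in range(usable)
    ]


def energy(samples):
    """Sum of squared samples, a rough measure of how loud a signal is."""
    return sum(x * x for x in samples)


# Made-up speech samples and made-up, different noise at each microphone.
speech = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]
noise_a = [0.2, -0.1, 0.3, -0.2, 0.1, -0.3, 0.2, -0.1]
noise_b = [-0.1, 0.2, -0.2, 0.1, -0.3, 0.2, -0.1, 0.3]

# Mic A hears the speech straight away; mic B hears it two samples later.
mic_a = [s + n for s, n in zip(speech, noise_a)]
mic_b = [0.0, 0.0] + [s + n for s, n in zip(speech, noise_b)]

enhanced = delay_and_sum([mic_a, mic_b], delays=[0, 2])
residual = [e - s for e, s in zip(enhanced, speech)]

# The noise left over after beamforming carries less energy than mic A's noise alone.
print(energy(noise_a), energy(residual))
```

In this toy case the delays are given; a real device has to estimate them, which is how it works out where the voice is coming from.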

But that’s not the end of it, is it? Again, we are just scratching the surface here, and soon you’ll learn why.

🤖 Machine Learning For Continual Improvement

Data and machine learning are the foundation of Alexa’s capabilities, and the good thing is that it becomes more valuable and accurate as the amount of data it gathers increases. Long story short, if machine learning and data science hadn’t advanced by leaps and bounds in the past decade or so, a voice-activated interface such as Alexa would still be wishful thinking.

Communication between humans and machines may look ordinary now, but the internal processes that enable this interaction suggest otherwise. For instance, did you ever realise that there were multiple bouts of back-and-forth interaction between your Echo and the Amazon servers whenever you asked Alexa about the weather?

Every time Alexa makes a mistake or sends an incorrect response in interpreting your request, that data is used to make the system smarter the next time around. And this technology is not just exclusive to Alexa. Machine learning is the reason for the rapid improvement in the capabilities of voice-activated user interfaces. Take Google speech, for example; it improved its error rate tremendously in a year, as it now recognises 19 out of 20 words it hears.
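Recognising 19 out of 20 words corresponds to a word error rate (WER) of 5%, the standard yardstick for speech recognisers. As a rough sketch, WER can be computed as the word-level edit distance between what was said and what was recognised, divided by the number of words actually spoken. The sentences below are invented for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edits needed to turn the reference words seen so far into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # a spoken word was dropped
                curr[j - 1] + 1,     # an extra word was inserted
                prev[j - 1] + cost,  # word matched or was substituted
            ))
        prev = curr
    return prev[-1] / len(ref)


# A 20-word request with a single misheard word ("sydney" heard as "sidney").
spoken = ("alexa please tell me what the weather will be like in sydney "
          "tomorrow morning before i leave home for work")
heard = spoken.replace("sydney", "sidney")
wer = word_error_rate(spoken, heard)
print(f"WER: {wer:.0%}")
```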

Understanding natural human speech is a massive problem for devices, but the fantastic thing is that we now have the computing power at our fingertips to improve it the more we use it.

🔠 Natural Language Processing (NLP)

Natural Language Processing is the process of converting human speech into words, sounds and ideas. It’s a subset of artificial intelligence and computational linguistics that handles interactions between machines and human natural languages, in which computers are required to analyse, understand, alter, or generate natural language.

So, how does it work with Amazon’s devices? At the start, the system receives natural language input, any language that has evolved naturally in humans through use and repetition without conscious planning.

After this, speech recognition converts the spoken input into a machine-readable form. Here, the audio is transformed into text, which NLU (Natural Language Understanding) processes to understand the meaning. NLU can be considered a subset of NLP that refers to the machine’s ability to understand our language and what we say.

Each request that Alexa receives is broken down into individual speech units and compared with a database to find the closest word matches. On top of that, the software has to identify sentence structure and terms relevant to different subsystems.

As an example, if Alexa notices multiple words that are interlinked with each other such as “food”, “delivery”, “pizza”, or “orders”, then it would most likely open a food delivery or restaurant app.
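A very simplified way to picture this is keyword scoring: count how many words in the request belong to each topic and pick the topic with the most hits. The intent names and keyword lists below are invented for illustration, and a production system would rely on trained statistical models rather than hand-written lists.

```python
import re

# Hypothetical intents and keyword sets, chosen only to mirror the example above.
INTENT_KEYWORDS = {
    "food_delivery": {"food", "delivery", "pizza", "order", "orders"},
    "weather": {"weather", "forecast", "rain", "outside"},
    "music": {"play", "song", "music", "album"},
}


def guess_intent(text):
    """Return the intent whose keywords overlap most with the request, or None."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    scores = {intent: len(words & keywords)
              for intent, keywords in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


print(guess_intent("Alexa, order a pizza for delivery"))
print(guess_intent("Alexa, tell me a joke"))
```

The first request hits three food-related keywords; the second matches nothing, so the sketch gives up rather than guessing.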

This is the main reason Alexa can distinguish between accents and dialects. There are unique databases for each language Amazon supports, including regional variations, and users need to select them in the Alexa app if their device doesn’t ship with them preloaded.

With natural language understanding (NLU), computers can deduce what a speaker means, not just the words they say. It enables voice assistance technology like Alexa to infer that you’re probably asking for a local weather forecast when you ask, “Alexa, what’s it like outside?”

NLU is based on the concept of artificial intelligence, and it plays a role in voice technology by recognising patterns and meanings in human language.

Lastly comes Natural Language Generation (NLG), which converts structured data into text that mimics human conversations. Once the command’s intent and the correct order are identified, the output will be fulfilled via the internet. Amazon Alexa will then respond accordingly using NLG. You can think of NLG as a writer who can turn inputted data into language that can be communicated.
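The simplest form of NLG is template filling: pick a sentence pattern for the intent and slot the structured data into it. The templates and field names below are hypothetical; modern assistants may well use learned models instead, so treat this as a sketch of the concept only.

```python
# Hypothetical response templates keyed by intent.
TEMPLATES = {
    "weather": "It's {temperature_c} degrees and {condition} in {city}.",
    "timer": "Your {duration_min}-minute timer is set.",
}


def generate_response(intent, data):
    """Turn structured data into a spoken-style sentence using a template."""
    template = TEMPLATES.get(intent)
    if template is None:
        return "Sorry, I don't know how to answer that."
    return template.format(**data)


reply = generate_response(
    "weather",
    {"temperature_c": 18, "condition": "partly cloudy", "city": "Sydney"},
)
print(reply)  # It's 18 degrees and partly cloudy in Sydney.
```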

All in all, voice-control AI is appealing not just because of its usefulness and convenience but also due to the natural user experience – talking is what we’ve been doing for centuries, not swiping or texting. This is a big reason this technology is so complicated to build and advance. Just think how non-linear our typical conversations are, with ever-changing topics, people interrupting each other, and not to mention body language to add meaning to what they are saying.

What Is The Alexa-Echo System Composed Of? ⚙️

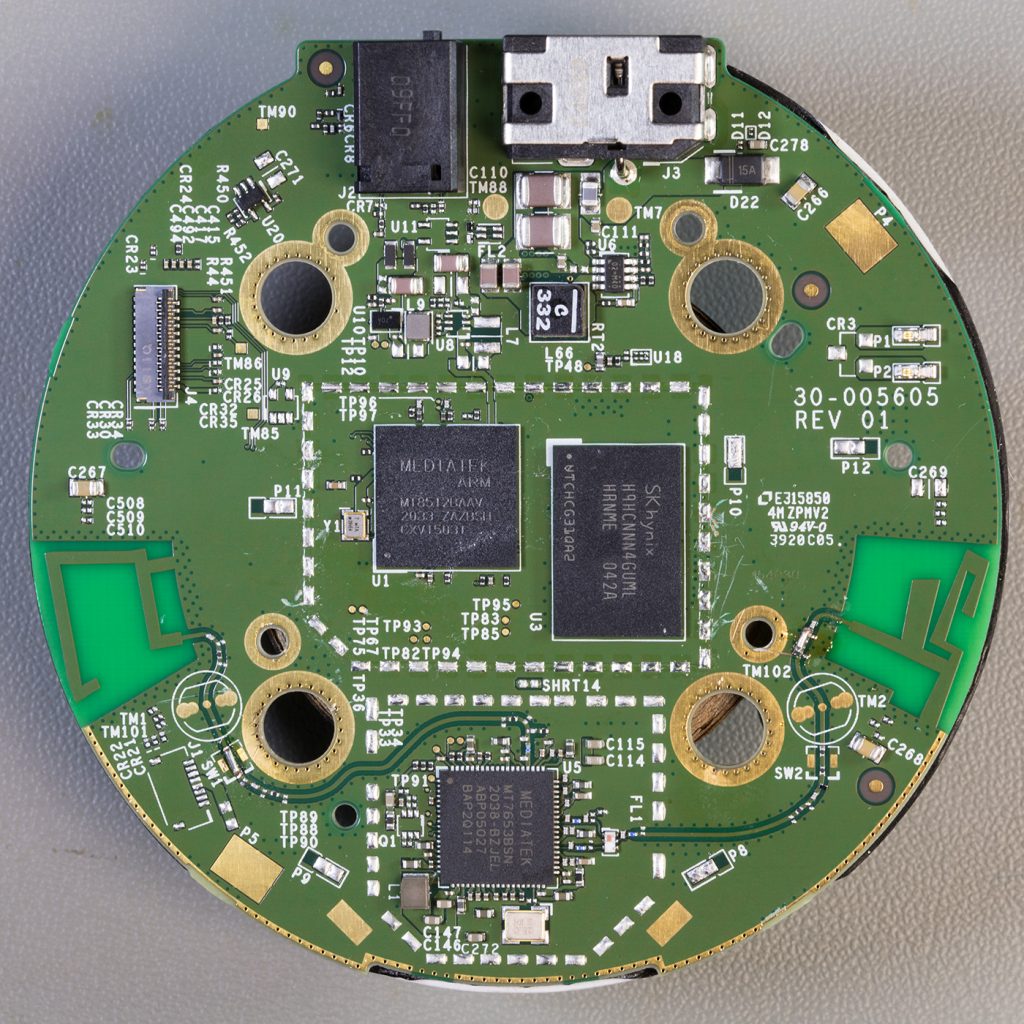

Image courtesy: briandorey.com

The Echo units, which house the voice assistant Alexa, have two main “modes.” The first is a small firmware chip wired to the microphone that contains only about 50-60k of onboard memory. Its only purpose is to listen for the wake word, “Alexa,” “Echo,” etc. It doesn’t do any actual language processing for this but only listens for distinct combinations of syllables.

Once the firmware chip hears the wake word, it powers up the main ARM chip that runs a stripped-down version of Linux. This startup process takes just under a second. Unfortunately, during this time, the firmware chip barely has enough memory to buffer what you’re saying if you immediately start talking after the wake word without pausing.

Once the ARM chip is on, the blue ring on the top illuminates, and recording begins. The firmware chip dumps its buffer to the start of the recording and then serves as a pass-through for the mic.
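The buffering behaviour described here is essentially a small ring buffer: it keeps only the most recent audio, hands it over when recording starts, and then lets new audio flow straight through. Here is a minimal sketch using Python's `collections.deque`. The class name, the frame labels and the buffer size are invented for illustration and say nothing about the actual firmware.

```python
from collections import deque


class PreRollBuffer:
    """Keeps only the most recent audio frames while the main chip wakes up."""

    def __init__(self, max_frames):
        # A deque with maxlen silently drops the oldest frame when full.
        self._frames = deque(maxlen=max_frames)

    def push(self, frame):
        self._frames.append(frame)

    def dump(self):
        """Hand over everything buffered so far and start afresh."""
        frames = list(self._frames)
        self._frames.clear()
        return frames


buffer = PreRollBuffer(max_frames=3)
for frame in ["f1", "f2", "f3", "f4", "f5"]:   # audio arriving during start-up
    buffer.push(frame)

recording = buffer.dump()   # only the last three frames survived
recording.append("f6")      # from here on, the mic is passed straight through
print(recording)            # ['f3', 'f4', 'f5', 'f6']
```

The tiny capacity is the point: if you talk for longer than the buffer can hold before the main chip is ready, the earliest audio is lost.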

Only this main ARM chip and operating system (OS) can access the networking interface, in or out. So the purpose of this next stage is to wait until it’s heard what sounds like an actual natural sentence or question.

Amazon is not interested in background noise – that would be a waste of bandwidth and resources. So instead, a rudimentary natural language processing step is done locally to determine when you’ve said an actual sentence and stopped speaking. It also handles straightforward “local” commands that don’t need server processing, like “Alexa, stop.” Only at that point is the whole sentence sent up to the actual AWS servers for processing.
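Deciding when you have stopped speaking is often called endpointing. One simple approach, sketched below, is to watch the loudness of successive audio frames and declare the sentence finished after a run of quiet frames. The threshold, the frame energies and the function name are illustrative assumptions, not details of the Echo's actual local processing.

```python
def find_end_of_speech(frame_energies, threshold=0.1, max_silent_frames=3):
    """Return the index of the first frame of the closing silence, or None."""
    heard_speech = False
    silent_run = 0
    for i, frame_energy in enumerate(frame_energies):
        if frame_energy >= threshold:
            heard_speech = True
            silent_run = 0                  # a short pause was not the end
        elif heard_speech:
            silent_run += 1
            if silent_run >= max_silent_frames:
                return i - max_silent_frames + 1
    return None                             # still talking, or never started


# Made-up loudness values: silence, speech with a brief pause, then silence.
energies = [0.01, 0.02, 0.6, 0.8, 0.5, 0.05, 0.7, 0.4, 0.02, 0.01, 0.03, 0.02]
end_frame = find_end_of_speech(energies)
print(end_frame)  # 8
```

Only the frames up to that point would need to be uploaded; the brief dip at frame 5 is ignored because it is shorter than the silence window.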

It is physically impossible for the device to constantly listen secretly, as the mic, networking, main wake chip, blue LED ring, and main ARM chip aren’t wired that way from a power perspective.

If you want to confirm any of the above, try disconnecting your home internet and playing around with Alexa. You’ll see that it only realises something is wrong at that last step when uploading the processed sentence to the servers.

How Does Amazon Alexa Understand You? 🤷

Well, for one, it’s not through the hardware containing it. For example, if you think the Echo speakers play a role in all of this, you’d be wrong, as they have neither the space nor the technological complexity to run machine learning systems.

It all comes down to an intricate combination of cloud computing and Amazon’s voice recognition service doing its magic. When you ask your Alexa-enabled device to do something, it records what you’re saying and sends it to Amazon’s voice recognition service.

This service, known as Alexa Voice Service (AVS), figures out what you’re asking for, quickly sends the correct information back to your device and performs the requested output, i.e. playing a song or answering a question.

The voice recognition computing tech inside the device doesn’t do much more than identify the one or two specific trigger words they’re programmed to listen to. Instead, all the actual computation is handled in Amazon’s cloud servers, which convert the received audio into text using speech recognition technology to understand the command at a machine level.

Working out the most likely sequence of words in real time is a demanding task. Fortunately, a decoder is up to it! It determines which string of words makes the most sense by evaluating two pieces: a prior and an acoustic model. The former draws upon large amounts of existing text to estimate how likely a word sequence is, without looking at the audio features, whereas the latter is trained with deep learning on pairings of audio and recorded transcripts. The two are combined and decoded on the fly, so deciphering speech happens in real time, almost in the blink of an eye.
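The way the two pieces combine can be sketched as a scoring rule: for each candidate transcription, add the acoustic model's log-probability (how well it fits the audio) to the prior's log-probability (how plausible it is as language), and keep the best total. The probabilities below are made up, and a real decoder searches a huge space of word sequences rather than comparing two fixed candidates.

```python
import math


def decode(candidates, acoustic_logprob, prior_logprob, prior_weight=1.0):
    """Pick the candidate with the highest combined log-probability."""
    return max(
        candidates,
        key=lambda words: acoustic_logprob[words] + prior_weight * prior_logprob[words],
    )


candidates = ["recognise speech", "wreck a nice beach"]

# Invented numbers: the audio alone slightly favours the wrong phrase...
acoustic = {
    "recognise speech": math.log(0.40),
    "wreck a nice beach": math.log(0.45),
}
# ...but the prior knows which phrase people are far more likely to say.
prior = {
    "recognise speech": math.log(0.010),
    "wreck a nice beach": math.log(0.0001),
}

best = decode(candidates, acoustic, prior)
print(best)  # recognise speech
```

The example shows why the prior matters: two phrases can sound almost identical, and only knowledge of ordinary language separates them.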

💬 Automatic Speech Recognition (ASR) Helps Alexa Understand Us

As we’ve touched upon several times already, speech recognition technology enables Amazon Alexa to convert input audio into text form, facilitating the required end-response from the output device. Through ASR, spoken words are recognised as words, allowing computers to finally understand us through our primary means of communication – speech.

With ASR technology, computers can detect patterns in audio waves, match them with a library of sounds in a given language, and, thus, identify what we’ve said. As a result, today’s voice recognition service is more intuitive than ever. For example, Amazon Alexa can understand different languages and accents and even tell you when you’re mumbling or thinking out loud based on a few ooh’s and um’s.

Breaking Down A Typical Command To Alexa 🔎

When you ask Alexa to do something or answer a question, you ask something like this, “Alexa, what is the weather like today?” This command can be categorised into three parts:

⏰ Wake Word

As we already know by now, the wake word is the trigger word that activates the Alexa-enabled device to start listening to your input command, i.e. voice. In the case of our example above, the wake word is “Alexa”, but it can also be “Echo” or “Computer”, depending on the device or specific wake settings you’re dealing with.

🔧 Invocation

Invocation is the term that is used to invoke Alexa’s unique skills. Users can invoke an Alexa skill with the invocation name for a custom skill to ask a question or with a name-free interaction.

The Alexa command, in our example, is a name-free invocation, whereas the command, “Alexa, Ask Weather App for today’s weather”, includes a custom skill name for invocation.

All this points to the fact that an invocation name is only needed for custom skills.

📣 Utterance

Utterance is the specific phrase of your command or the request you want Alexa to fulfil. The same request can be phrased in many different ways, so coding utterances means accounting for the many subtle variations people are likely to use when asking for the same thing.

A great skill requires thought and creativity: predicting every variation users could say when making requests, like wanting to know the time. Crafting meaningful conversational experiences is an art that demands both knowledge and insight into how humans interact with technology.
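The three parts can be pulled apart with a few lines of Python. This is only a toy parser working on text: the wake-word list comes from the examples above, while the "ask ... for/to/about ..." pattern and the function name are assumptions for illustration, not Alexa's real grammar.

```python
import re

WAKE_WORDS = ("alexa", "echo", "computer")


def parse_command(spoken):
    """Split a transcribed command into wake word, invocation name and utterance."""
    text = spoken.strip().rstrip("?.!")
    first, _, rest = text.partition(",")
    wake_word = first.strip().lower()
    if wake_word not in WAKE_WORDS:
        return None                      # not addressed to the assistant
    rest = rest.strip()
    # Custom skills are invoked by name, e.g. "ask <skill name> for <request>".
    match = re.match(
        r"ask (?P<name>.+?) (?:for|to|about) (?P<utterance>.+)",
        rest,
        re.IGNORECASE,
    )
    if match:
        return {
            "wake_word": wake_word,
            "invocation_name": match["name"],
            "utterance": match["utterance"],
        }
    # Name-free interaction: everything after the wake word is the utterance.
    return {"wake_word": wake_word, "invocation_name": None, "utterance": rest}


print(parse_command("Alexa, what is the weather like today?"))
print(parse_command("Alexa, Ask Weather App for today's weather"))
```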

Where Does Amazon Alexa Get Information From? 🌐

Well, we have established that every voice request goes to Amazon’s servers, but how does Alexa get the information to relay back? This depends significantly on the request that Alexa receives and has to fulfil. Requests that Amazon has thought of ahead of time will most likely use an API or another built-in program to figure out the answer.

If you ask for the weather, the AVS will draw on the OpenWeatherMap API to get your weather information. It will then synthesise a voice recording for Alexa in the cloud server, which is sent back to be played over your Echo’s speaker.

The AVS servers will handle more straightforward requests like mathematical calculations, but more complicated ones will be handled through search engines. This is where you’ll come across “here’s what I’ve found on the internet”. Alexa will check in with you following that to confirm the effectiveness of that output to improve its usefulness continually.
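This tiered behaviour can be pictured as a simple router: try a built-in calculation first, then any handler registered for an anticipated topic, and fall back to a web search last. In the sketch below, the handlers and the search function are stand-in stubs that return canned text; no real API is called, and the function names are invented for illustration.

```python
import re


def route_request(text, api_handlers, web_search):
    """Answer locally if possible, then via a known API, else via search."""
    lowered = text.lower()

    # Tier 1: simple arithmetic handled directly.
    match = re.fullmatch(r"what is (\d+) (plus|minus|times) (\d+)", lowered)
    if match:
        a, op, b = int(match[1]), match[2], int(match[3])
        result = {"plus": a + b, "minus": a - b, "times": a * b}[op]
        return f"{a} {op} {b} is {result}."

    # Tier 2: requests anticipated ahead of time go to a dedicated handler.
    for keyword, handler in api_handlers.items():
        if keyword in lowered:
            return handler(lowered)

    # Tier 3: everything else falls back to a search.
    return "Here's what I've found on the internet: " + web_search(lowered)


# Stub handlers standing in for real services.
handlers = {"weather": lambda query: "It's 18 degrees and partly cloudy."}
search = lambda query: f"top result for '{query}'"

print(route_request("What is 6 times 7", handlers, search))
print(route_request("What's the weather today", handlers, search))
print(route_request("Who invented the telephone", handlers, search))
```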

Should You Be Worried From A Privacy Perspective? 🕵🏼‍♂️

For the most part, no. That’s because the device is only activated after hearing the trigger word. Anything you say before that is not recorded and, thus, not sent to Amazon’s servers.

That said, problems can arise when the device inadvertently gets activated when it hears something similar to the trigger word. This is when it listens and records everything around you without your knowledge.

However, there is no need to worry: accidental activations happen now and then, and anything sent this way will be marked as a mistake if there is no request to fulfil. If that still doesn’t address your concerns, it’s essential to know that every recording made by an Alexa-enabled device is saved to your account.

You can access your recordings via the Amazon website or your Alexa app from the Privacy menu in the Settings tab. Here, you’ll be able to review your recording history, listen back to the recordings, and even delete any that you’re not happy with.

You can even delete huge chunks by date or all at once. There’s also the option to delete recordings with your voice by saying, “delete what I just said”, or “delete everything I said today.”

👁️‍🗨️ How Alexa’s Privacy System Works

Alexa-enabled devices are designed to protect your privacy, and every device has features that give you transparency and control over your data.

For example, when you want to talk to Alexa, you must first wake up the device by using the “wake word” or pressing the action button. Then you ask Alexa something like, “what’s the weather today in Sydney?” and she’ll respond, “it’s 18 degrees and partly cloudy.” Your device only begins sending requests to Amazon’s cloud to be processed and safely stored once it has been woken up.

This means that unless the device detects the wake word, nothing is recorded. But how do you know when your Echo device sends your request to the Amazon Cloud? Every Echo device has an indicator like a blue light or an audible tone that lets you know when your request is being recorded and sent to the Amazon Cloud servers.

Amazon Echo smart speakers are built with a microphone “off” button that electronically disconnects the microphone. On Echo devices with a screen, you can turn off both the camera and the microphone with a single button. When the button is pressed, your device cannot send the request to the cloud even when you use the wake word.

You can slide in the built-in shutter on some smart displays to cover the camera for additional peace of mind. When you factor in the aforementioned recording storage, it is clear that Alexa-enabled devices have multiple layers of protection that ensure everything you want to stay private stays private.

What Can Developers Do With Alexa? 👨‍💻

With Alexa, developers can build customised voice AI experiences that offer users a more immersive and intuitive user experience. Alexa’s developer platform provides tools, APIs, reference solutions, and relevant documentation to make it easier to build customised technological solutions using Alexa.

By creating new Alexa skills, you can unlock the potential of over 100 million Alexa-enabled devices worldwide to connect with your customers and engage them in a whole new way. Developers can build innovative skills using the Alexa Skills Kit to leverage the power of voice recognition. Businesses should take this opportunity to tap into an ever-growing customer base by creating unique experiences for users that they can enjoy entirely with just their voice.

Next up, developers can use Alexa for Business to add voice control features to their workplace and enterprise applications. In addition, Alexa’s developer platform has a dedicated offering for the hospitality industry, through which businesses can run more smoothly with voice-activation features, for example by allowing guests to quickly access the services they provide.

🎯 Extend Alexa’s Usability And Usefulness With Alexa Skills

Alexa can make life easier by allowing users to check the news or play a game with their voice! The Alexa Skills Kit (ASK) is what makes this possible. ASK provides developers with all the tools necessary for creating content – called skills – that gives users an interactive, hands-free way of interacting with their virtual assistant.

Skills are like apps for Alexa. Many of them control smart devices or services that Alexa cannot handle on her own. So, essentially, you are adding a new layer of functionality to Alexa by adding skills to her proverbial “skillset”.

In simpler terms, skills are abilities that Alexa can gain to do new things that the device cannot do on its own. So, for example, if you learn how to play the guitar or ride a bike, that’s a new skill you’ve picked up. It’s the same basic concept with Alexa skills.

The great thing is that developers can build their own skills for Alexa-enabled devices using the Alexa Skills Kit (ASK), manage them programmatically through the Alexa Skill Management API (SMAPI), or integrate Alexa into an existing smart device via the Alexa Voice Service. With ASK, you can create a new skill tailored to your specifications. Your imagination is the limit – from game skills to music and beyond! Alternatively, one of the pre-built voice interaction models may meet all your needs. No matter what type of experience you have in mind, it’s within reach with ASK.

A typical Skill Development workflow goes something like this:

✍ Skill Design

Amazon recommends that Alexa skills be designed to be user-centric, complementing attractive visual interfaces. Designers must approach their designs with voice-first interactions adapted to how end-users would interact through speech. Alexa-enabled devices with a screen require a design prototype containing a visual design and the voice interaction user flows.

🏗️ Skill Development

When building Alexa skills, developers can either follow one of the pre-built voice interaction models or a custom voice interaction model.

Suppose you want to create a skill using one of the pre-built voice interaction models. In that case, you’ll have to start by provisioning your skill on Amazon Web Services (AWS) Lambda, which requires both an AWS account and an Amazon developer account. Then, depending on which language you plan to use for coding your skill – Node.js, Java, Python, C#, Go, Ruby or PowerShell – you’ll need the corresponding development environment tools to author that Lambda function.

To build a skill with a custom voice interaction model, you can use the Alexa-hosted option provided by ASK for custom skills to create, store and host your skill and resources on AWS. Or, you can arrange your backend development resources on AWS and host your skill as an AWS Lambda function accordingly.

You’ll also need a development environment appropriate for the programming language of your choice. For example, if you choose to author a Lambda function, Node.js, Java, Python, C#, Go, Ruby or PowerShell would be your options. However, if you use the Alexa-hosted skill option, you can code in either Node.js or Python.
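To give a feel for what a skill's backend looks like, here is a minimal sketch of a Lambda-style handler in plain Python that works directly on the JSON a custom skill receives, without the ASK SDK. The intent name `GetWeatherIntent` and the canned replies are hypothetical, and the request and response shapes follow the custom-skill JSON format as we understand it, so check Amazon's documentation before relying on the details.

```python
def lambda_handler(event, context):
    """Entry point Lambda would call with the skill request as a dict."""
    request = event["request"]

    if request["type"] == "LaunchRequest":
        # The user opened the skill without asking for anything specific.
        speech, end_session = "Welcome. Ask me for today's weather.", False
    elif (request["type"] == "IntentRequest"
          and request["intent"]["name"] == "GetWeatherIntent"):  # hypothetical intent
        # A real skill would look the forecast up; this reply is canned.
        speech, end_session = "It's 18 degrees and partly cloudy.", True
    else:
        speech, end_session = "Sorry, I didn't catch that.", True

    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech},
            "shouldEndSession": end_session,
        },
    }


# Simulate the request a skill might receive for the hypothetical intent.
sample_event = {
    "request": {"type": "IntentRequest", "intent": {"name": "GetWeatherIntent"}}
}
reply = lambda_handler(sample_event, None)
print(reply["response"]["outputSpeech"]["text"])
```

Calling the handler locally with a hand-built event, as in the last lines, is also a quick way to sanity-check the logic before deploying.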

🧪 Skill Testing

Testing the skill without a device is possible using the Alexa simulator in the developer console or Visual Studio Code. Following the skill-testing recommendations and requirements is essential before submitting for certification. You also have the option to beta-test your skill with a small testing group before launching to the world.

📝 Certify and Publish Your Skill

Amazon will certify the skill against its quality, security, and policy guidelines before deeming it fit or otherwise for publishing in the Alexa Skills Store.

📈 Skill Monitoring and Optimisation

Finally, after publishing your skill, you can always check it for usage and relevant operational analytics and view earnings in the developer console.

Final Takeaway 🧐

Through a unique blend of voice recognition, natural language processing, artificial intelligence, and machine learning, Alexa can interpret voice commands and perform them accordingly.

Now you’re in the know! With Amazon Alexa & Alexa-enabled devices, technological magic is at your fingertips. From requesting a song to ordering groceries, tech prowess takes over – so fast that it’s like having an extra pair of hands around. Enjoy the convenience and power of Alexa henceforth, knowing just how much is going on in the background!

Amazon Alexa is just one of many in the long list of great examples of what technological innovation primarily has to offer – making people’s lives easier. If you’d like to do the same for your customers and strategically incorporate technology into your business, feel free to reach out and chat with us. We’d love to hear you out and discuss everything we can to help improve your customer experience.

Published On

December 01, 2022